Human Activity Recognition based on Smartphone and Wearable Sensors


In another article accepted by Elsevier’s journal Neurocomputing, Jessica Sena presents one of her works carried out in the Smart Sense laboratory.

Sensor-based Human Activity Recognition (HAR) has been used in many real-world applications, providing valuable knowledge to areas such as human-object interaction, medicine, the military, and security. However, the use of such data brings two main challenges: the heterogeneity of data coming from multiple sensors and the temporal nature of the sensor data.

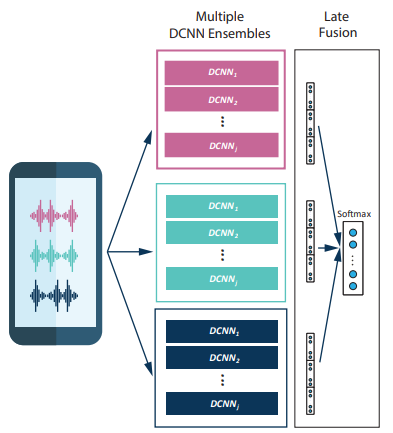

In our work, we propose a new strategy to cope with both issues. First, we process each sensor separately, learning its features and performing the classification before fusing the results with those of the other sensors. Second, to exploit the temporal nature of the sensor data, we extract patterns at multiple temporal scales using an ensemble of Deep Convolutional Neural Networks (DCNN). This is convenient because the data are already a temporal sequence, and the multiple scales provide meaningful information about the activities performed by the users. Consequently, our approach can extract both simple movement patterns, such as a wrist twist when picking up a spoon, and complex movements, such as the human gait. Together, the two strategies form a multimodal deep convolutional neural network ensemble that works directly on the raw sensor data, with no pre-processing, which makes it general and minimizes engineering bias.
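To make the two ideas more concrete, here is a minimal sketch in plain NumPy. It is not the network from the article: the kernel sizes `(3, 7, 15)`, the number of filters, the two example sensors, the function names, and the use of probability averaging as the fusion rule are all assumptions made for illustration, and the weights are random rather than trained. It only shows the general shape of the approach: each sensor gets its own branch, each branch convolves the raw window at several temporal scales, and the per-sensor class probabilities are combined afterwards.

```python
import numpy as np


def conv1d_valid(x, kernels):
    """1D convolution over time. x: (T, C), kernels: (K, C, F) -> (T-K+1, F)."""
    k = kernels.shape[0]
    # windows has shape (T-K+1, C, K): one length-K slice per time step.
    windows = np.lib.stride_tricks.sliding_window_view(x, k, axis=0)
    return np.einsum("tck,kcf->tf", windows, kernels)


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def init_branch(rng, channels, scales, n_filters, n_classes):
    """Random (untrained) parameters for one hypothetical sensor branch."""
    return {
        "kernels": [rng.normal(0, 0.1, (k, channels, n_filters)) for k in scales],
        "W": rng.normal(0, 0.1, (len(scales) * n_filters, n_classes)),
        "b": np.zeros(n_classes),
    }


def sensor_branch(x, params):
    """Classify one sensor's raw window using several temporal scales."""
    feats = []
    for kernels in params["kernels"]:  # one kernel bank per temporal scale
        act = np.maximum(conv1d_valid(x, kernels), 0.0)  # ReLU
        feats.append(act.max(axis=0))  # global max pooling over time
    features = np.concatenate(feats)
    return softmax(features @ params["W"] + params["b"])


def late_fusion(windows, branches):
    """Assumed fusion rule: average the per-sensor class probabilities."""
    probs = [sensor_branch(x, p) for x, p in zip(windows, branches)]
    return np.mean(probs, axis=0)


rng = np.random.default_rng(0)
scales = (3, 7, 15)  # assumed short, medium and long temporal scales
n_classes = 5
# Two hypothetical 3-axis sensors, each with a 100-sample raw window.
windows = [rng.normal(size=(100, 3)), rng.normal(size=(100, 3))]
branches = [init_branch(rng, 3, scales, 8, n_classes) for _ in windows]

fused = late_fusion(windows, branches)
predicted = int(np.argmax(fused))
```

Short kernels respond to brief patterns and long kernels to patterns that span more samples, which is the intuition behind using several scales. Because each sensor has its own branch, sensors with different numbers of channels can be handled without forcing them into one shared input tensor.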

We demonstrate its suitability for HAR on wearable sensor data by performing a thorough evaluation on seven important datasets. Our approach outperforms previous state-of-the-art results, as well as an adaptation of the Inception module network that we use as a baseline for our premise of an ensemble of convolutional kernels. We show that our approach achieves better results when using multiple sensors and discriminates activities better than the most common approaches. Additionally, we demonstrate the feasibility of deploying our approach on resource-limited embedded devices by evaluating it on a Raspberry Pi 3.